Removed by mod

Absolutely human, I even removed the context text and it didn’t even flinch.

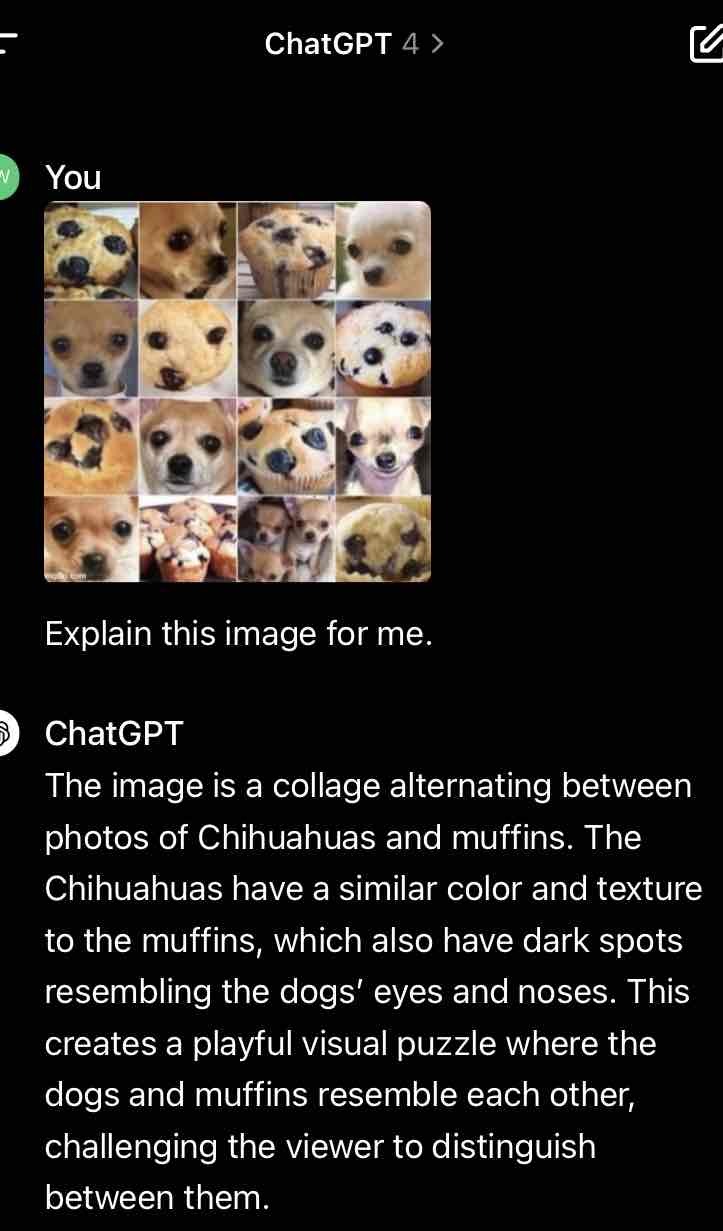

“Similar color” yep seems right, “…and texture” wait what?

idk what kind of muffin they are feeding this AI but it sure is a hairy one

Cromch

You don’t know until you try.

Wow, that’s a little too impressive. I’m guessing that image (or each individual image in it) was probably in its training set. There are open training sets with adversarial images, and these images may have come from them. Every time I’ve tried to use ChatGPT with images it has kinda failed (on electronic schematics, plant classification, images of complex math equations, etc). I’m kind of surprised OpenAI doesn’t just offload some tasks to purpose-built models (an OCR or a classification model like iNaturalist’s would’ve performed better in some of my tests).

This exact image (without the caption-header of course) was on one of the slides for one of the machine-learning related courses at my college, so I assume it’s definitely out there somewhere and also was likely part of the training sets used by OpenAI. Also, the image in those slides has a different watermark at the bottom left, so it’s fair to assume it’s made its rounds.

Contrary to this post, it was used as an example of a problem that machine learning can solve far better than any algorithm humans would come up with.

Just take a bite out of each. You know you got the right one when it starts yelping.

Did people make you? If so, yes!

wow!! such pretty orange houseplants

Ah yes, I grow these orange houseplants every year. Visitors definitely admire this species of lovely orange houseplant 😉

Unfortunately, AI is quickly becoming better at this kind of thing than average humans are. And the internet is doomed for sure as a result.

We’re gonna be swimming in a sea of AI generated, convincing half truths and lies.

Hot take: AI good. Every mentioned problem with AI actually stems from capitalism.

I do agree on the whole, it’s the next phase of automation. The real problem stems from the fact that we hold onto a system where a tiny handful of people get the full benefit of the productivity, while the others are paid in time increments whose value goes down with demand. So as more jobs are automated or assisted (to allow more work with fewer people), supply and demand devalue the labor.

This is why I support worker ownership and cooperatives rather than corporate ownership. I think we need to shift towards a Mutualist economy rather than a Capitalist one.

No. It stems from closed source software. True, however, that stems from capitalism. Without that we’d be good friends with AI and fix all the obvious problems we caused. Then do like Bill Hicks said and explore space.

Open source AI can still be problematic under capitalism. It can still be developed to disproportionately favor the ruling class and used to disproportionately benefit them.

I’m so proud of u my friend

facepalm

It’s the ONLY method to deal with Closed Source. It can be used to end capitalism. It can be used to end money entirely.

Do it ALL FOSS now, or else…EOTW. End of story. Literally.

Making it FOSS wouldn’t solve the problem, because FOSS tools can still be used by capitalists to displace workers and erode worker bargaining power.

This is true of pretty much EVERY tool, but never has a tool had the potential to negatively impact so many in such a diversity of roles.

So again, the problem isn’t closed source, the problem is capitalism. If you fixed the problems of capitalism, then all software tools would naturally be FOSS, but that’s a product of fixing the problems, not the mechanism to fix the problems.

Do you even know what FOSS is?

Free Open Source Software. Do you even know what capitalism is?

I know what they say it is. I also know what it really is.

Yep, it’s always the fault of the people using it for bad deeds.

It’s not about using it for bad deeds, as any other tool like a kitchen knife can be used the same way. It’s more about the necessity of working in order to live.

I agree with you, and don’t forget that Capitalism shouldn’t be reformed, as Social Democracy claims, but must be destroyed from head to toe.

That’s a view I have for many things. The desire for, and possibility of, getting more money always distorts and corrupts. It makes pretty much everything worse by rewarding deception, externalised waste, and exploitation.

I like the way one of those pictures is of a slightly deformed dog. Figure THESE out, AI!

(No please don’t. We don’t want AI to figure out any more than it already has.)

I only see deformed dogs in the non-muffin ones.

Are you sure those aren’t the muffins? :P

That’s because shitty people love their dogs deformed, unable to breathe, and with high vet bills. They think it’s cute or purebred.

You can hear some dogs having trouble breathing. How can people like something like that? Disgusting.

12 is definitely abnormally shaped.

yup thatsa the one ima talkin about

I’m pretty sure GPT-4 can do this

Yep, 0 errors. Couldn’t find a video I saw

I hope each of them is named muffin

Or the next Google CAPTCHA after one of the sneaker-scalpers trains their own.

I want a War on AI so bad

I don’t! I want people who are concerned about the misuse of AI, particularly by corporations and world governments, to learn how to use AI to fight back against our oppressors or at least make AI-powered technologies that are helpful for common people, and to archive how it works, particularly how it fails.

“Technology bad! Oh no muh manual labor job!” -everyone throughout history

AI isn’t the issue, it’s the fact that we don’t have any kind of system set up to handle the eventual takeover of the economy by robotics and AI.

Nah, coming from data and signal processing fields, I think AI is overused by ppl that are incompetent. There are much more elegant, measurable and efficient ways of signal processing.

Anybody can use AI, okay. But it is still a shitty solution.

How is a technology that depends on the competence of the user to blame when the user is incompetent?

AI works like a black box for regular users and top AI researchers alike. That’s why it’s not a good design. As a researcher you cannot obtain direct information about what the AI model is doing inside. Just results.

Idk why you all know so much about AI now. You know that AI has existed since the 80’s, right? xD.

Now everybody is a fan of it because we can waste a ton of resources to run this technological abomination. That’s basically the difference between now and then.

The cylons won’t take kindly to this…

Mmmm blueberry muffins…

Click all the images containing something sweet.

All of them.

Artists are now fighting back against AI stealing their works

Is that the real war on AI?

Human capitalists fighting for their profit VS other Humans that fight for some rights of theirs.

The age of digital war is really settling in now that even protesting is something we do with digital tools.

Glaze was defeated a long while ago.

is it worth it?

Tried it on ChatGPT 4, here’s what it thinks:

This image is a collage that alternates between photos of Chihuahuas and blueberry muffins. The arrangement is such that it plays on the visual similarities between the two, with the muffins and parts of the Chihuahuas (likely their faces) mimicking each other in color and texture. This creates a humorous effect, as it can be initially challenging to differentiate between the muffins and the dogs.

Starting from the top left corner and moving left to right, row by row:

- Blueberry muffin with spots resembling a dog’s eyes and nose.

- Face of a Chihuahua with a similar coloration to the muffin.

- Blueberry muffin resembling the face of a Chihuahua.

- Chihuahua face with a light fur color matching the muffin’s surface.

- Chihuahua face with dark eyes and nose similar to blueberry spots.

- Muffin with a pattern that resembles a Chihuahua’s facial features.

- Chihuahua with an expression and coloring that echoes the appearance of a muffin.

- Muffin with blueberries and coloring that looks like a Chihuahua’s face.

- Chihuahua with a facial expression and fur colors that mimic a muffin’s texture.

- Muffin with blueberries mimicking the eyes and nose of a Chihuahua.

- Chihuahua with features that resemble the spots on a muffin.

- Muffin resembling a Chihuahua’s face in color and texture.

- Close-up of a Chihuahua’s face with colors similar to a blueberry muffin.

- Muffin with a pattern of blueberries resembling a Chihuahua’s face.

- Chihuahua looking directly at the camera, with fur colors like a muffin.

- Two Chihuahuas close together, with their heads resembling muffin tops.

Conclusion: it sometimes gets fooled :)

It’s likely seen the image before. Try randomising the image, reversing some of them, altering the gamma, and adding some noise. See how it does then.
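For anyone who wants to try that, here is a rough sketch of the idea in plain Python. It assumes the collage has already been cut into per-cell grayscale tiles (nested lists of 0-255 values) and that you know the true label of each cell. The function names, the default parameters, and the tiny demo tiles are all made up for illustration; a real test would load the actual image with an image library and feed the result back to the model.

```python
import random


def flip_horizontal(tile):
    """Mirror a tile left-to-right ("reversing some of them")."""
    return [row[::-1] for row in tile]


def adjust_gamma(tile, gamma):
    """Apply out = 255 * (in / 255) ** gamma to every pixel."""
    return [[round(255 * (p / 255) ** gamma) for p in row] for row in tile]


def add_noise(tile, sigma, rng):
    """Add Gaussian noise with standard deviation sigma, clipped to 0..255."""
    return [
        [min(255, max(0, round(p + rng.gauss(0, sigma)))) for p in row]
        for row in tile
    ]


def perturb_collage(tiles, labels, seed=0, flip_prob=0.5,
                    gamma_range=(0.7, 1.4), sigma=8.0):
    """Shuffle the cells, then randomly flip, gamma-shift and add noise to each.

    Returns the perturbed tiles together with the labels in the new order,
    so the model's answers can still be scored.
    """
    rng = random.Random(seed)
    order = list(range(len(tiles)))
    rng.shuffle(order)  # "randomising the image": new cell order

    new_tiles, new_labels = [], []
    for i in order:
        tile = tiles[i]
        if rng.random() < flip_prob:
            tile = flip_horizontal(tile)
        tile = adjust_gamma(tile, rng.uniform(*gamma_range))
        tile = add_noise(tile, sigma, rng)
        new_tiles.append(tile)
        new_labels.append(labels[i])
    return new_tiles, new_labels


if __name__ == "__main__":
    # Hypothetical 2x2 "tiles" standing in for real image crops.
    demo_tiles = [
        [[10, 200], [30, 40]],
        [[90, 90], [120, 250]],
        [[0, 255], [128, 64]],
    ]
    demo_labels = ["muffin", "chihuahua", "muffin"]
    tiles, labels = perturb_collage(demo_tiles, demo_labels, seed=42)
    print(labels)
```

If the model still labels every cell correctly after this, memorisation of the original collage is a less convincing explanation. If accuracy drops, that hints it had seen the image before.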

It did make errors. Also, its training data is old, so it’s unlikely that it has seen the images.