“Gradient descent” ≈ on a “hilly” (mathematical) surface, start from an initial guess and keep stepping downhill until you reach a low point. That’s usually the lowest point near your starting guess, not necessarily the lowest point on the whole surface. The “gradient” is basically the steepness: how fast the thing you’re trying to optimize changes as you move through “space”, and in which direction it increases fastest. Since the gradient points uphill, stepping in the opposite direction takes you downhill. “Descent” means exactly that: moving downhill toward a minimum.
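Here’s a tiny sketch of the idea in one dimension. The function `f` is just a made-up “hilly” curve with two valleys (my own choice for illustration, not anything standard), and `df` is its derivative, which is the 1-D version of the gradient. Each step moves a little bit opposite to the slope:

```python
def f(x: float) -> float:
    """A made-up 'hilly' 1-D function with two valleys (illustration only)."""
    return x**4 - 3 * x**2 + x


def df(x: float) -> float:
    """Derivative of f, i.e. the 1-D gradient (slope) at x."""
    return 4 * x**3 - 6 * x + 1


def gradient_descent(x0: float, learning_rate: float = 0.01, steps: int = 1000) -> float:
    """Start at x0 and repeatedly take a small step downhill."""
    x = x0
    for _ in range(steps):
        # The slope points uphill, so move the opposite way.
        x -= learning_rate * df(x)
    return x


# Same function, two different initial guesses.
left = gradient_descent(-2.0)
right = gradient_descent(2.0)
print(f"start -2.0 -> x = {left:.3f}, f(x) = {f(left):.3f}")
print(f"start  2.0 -> x = {right:.3f}, f(x) = {f(right):.3f}")
```

Starting at -2 should land near x ≈ -1.30 (the deeper valley), while starting at 2 should land near x ≈ 1.13, a shallower valley. That’s the “lowest point near your initial guess” thing: the algorithm only knows about the slope right where it’s standing. The `learning_rate` and `steps` values are just numbers that happen to work for this curve.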

I’m glossing over a lot of details, particularly what a “surface” actually means in the high-dimensional spaces that AI uses, but a lot of problems in mathematical optimization are solved like this. One of the steps in training an AI model is an optimization, and that step often uses gradient descent (or a variant of it). That being said, not every process that uses gradient descent is AI, or even machine learning. I’m actually taking a course this semester from a professor whose research is largely on optimization algorithms that don’t use gradient descent!
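To make the “training is an optimization” part a bit more concrete, here’s a toy sketch: “training” a model that’s just a straight line, y ≈ w·x + b, by gradient descent on the mean squared error. The data is made up so it lies exactly on y = 2x + 1, and the function name `train_line` is hypothetical. Real AI training uses libraries with automatic differentiation, millions of parameters, and usually stochastic (mini-batch) variants of gradient descent, so treat this as an illustration only. Here the “surface” is the error as a function of the two parameters (w, b), so it’s 2-D instead of millions-of-D:

```python
def train_line(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y ≈ w*x + b by plain gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # initial guess for the two parameters
    n = len(xs)
    for _ in range(steps):
        # Prediction error for each data point.
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of mean((w*x + b - y)^2) with respect to w and b.
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        # Step downhill in both parameters at once.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]  # toy data lying exactly on y = 2x + 1
w, b = train_line(xs, ys)
print(f"w = {w:.3f}, b = {b:.3f}")
```

It should recover w ≈ 2 and b ≈ 1. For a straight line you could actually solve this exactly without any iteration; gradient descent earns its keep when the model is too big or too complicated for that.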

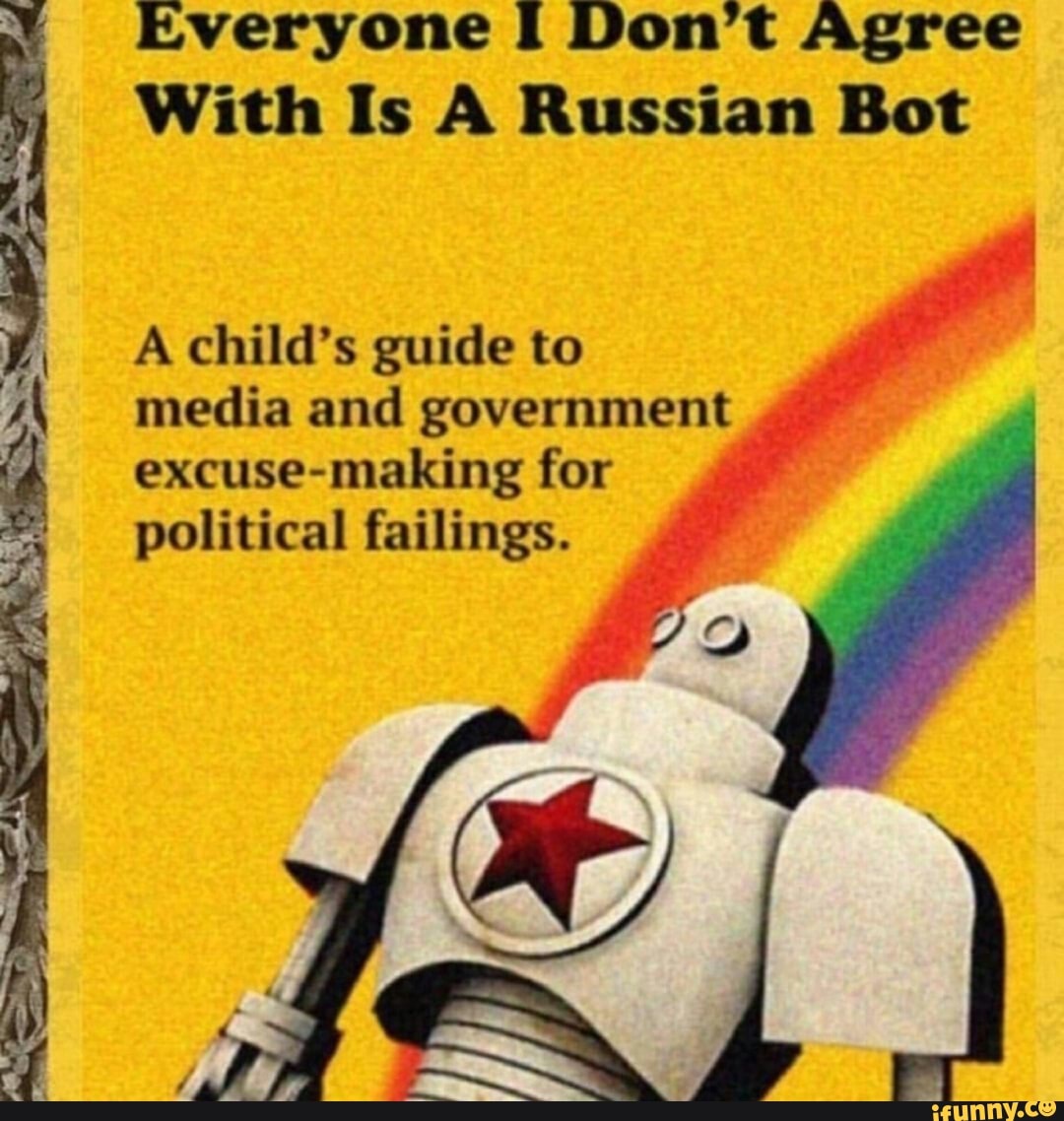
